Published on Mar 7, 2018

Topic: Tutorial

Lately, I've been looking into backups a lot. At one point, I even looked into setting up a Time Machine server on my FreeBSD machine. That said, it's all been a headache that sometimes works and sometimes doesn't. Now, however, I've discovered Duplicity, a GPL-licensed backup tool written in Python that is feature-rich, simple, reliable, and fast.

Features of Duplicity

- Relatively easy to automate

- Encrypts your backups by default

- Compresses your backups by default

- Takes incremental backups

- Can self-heal if a backup goes wrong

- Allows incremental restoration from backups

- Supports a lot of different protocols

- Supports AWS S3 very well by default.

A list of duplicity's supported protocols:

- acd_cli

- Amazon S3

- Backblaze B2

- Copy.com

- DropBox

- ftp

- GIO

- Google Docs

- Google Drive

- HSI

- Hubic

- IMAP

- local filesystem

- Mega.co

- Microsoft Azure

- Microsoft Onedrive

- par2

- Rackspace Cloudfiles

- rsync

- Skylabel

- ssh/scp

- SwiftStack

- Tahoe-LAFS

- WebDAV

Security Considerations

Backups are a potentially huge security liability, because they can contain a lot of sensitive information. Often, we back up information precisely because it is sensitive, so we need to consider the ramifications of our backup system. In particular, there are three systems we need to consider when using duplicity:

- The source machine

- Data in transit

- The backup server

Part of the key to ensuring good security is having sensible assumptions about the state and security of your system. These assumptions depend on the nature of the machines in use, the nature of the network, and whether you're using full-disk encryption on the source and target machines. Here are the assumptions I'm working with; if they're not true for you, adjust your security measures accordingly.

- I am using duplicity's built-in GnuPG (PGP) encryption. This protects the data on the target machine and in transit.

- Access to the source and target machines is restricted.

These two assumptions allow a generous amount of leeway in how we handle things. That said, they're really a bare minimum for confidentiality, and they don't necessarily guarantee integrity. Using a secure transport protocol, like SFTP or S3 over HTTPS, solves many of these problems. If you're using FTP over the internet, for example, you're wide open to a man-in-the-middle attack. For that reason, I recommend using a protocol that is already encrypted and password-protected.
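As a concrete illustration of these assumptions, here is a minimal sketch of a public-key encrypted backup over SFTP. It assumes a GPG key pair already exists in your keyring and that the backup server accepts SSH logins. The key ID, source path, host, and the helper names run and encrypted_backup are hypothetical placeholders, and the DRY_RUN switch is part of this sketch, not a duplicity feature: it prints the command instead of running it.

```shell
#!/bin/sh
# Sketch: duplicity backup encrypted to a GPG public key, sent over SFTP.
# All defaults below are hypothetical placeholders; override via environment.
gpg_key="${gpg_key:-ABCD1234}"        # ID of a key already in your keyring
backup_src="${backup_src:-/home/me}"  # directory to back up
# In duplicity URLs, "//" after the host means an absolute path on the server.
backup_dest="${backup_dest:-sftp://backup@nas.example.com//backups/myhost}"

# Print the command instead of executing it when DRY_RUN=1 (sketch-only switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

encrypted_backup() {
    # --encrypt-key: archives are encrypted to this public key before upload,
    # so the backup server only ever stores ciphertext. SFTP protects transit.
    run duplicity --encrypt-key "$gpg_key" "$backup_src" "$backup_dest"
}
```

For example, DRY_RUN=1 encrypted_backup prints the duplicity command it would run. Keep in mind that restoring a backup made this way requires the matching private key.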

Basic Duplicity Usage

I have a script template that I use for my duplicity backups. I currently use a cloud solution for file synchronization and offsite backups, and duplicity for larger local backups of up to about 200GB. My ISP has a data cap, so backing up to S3 would be impractical. If you're considering S3, also keep cost in mind: S3 costs $0.023 per gigabyte per month, which gets expensive. If you want offsite backups, here's a quick price comparison.

| Service | Storage | Price/GB/month | Price/month |
|---|---|---|---|
| AWS S3 | 15GB | $0.023 | $0.35 |
| AWS S3 | 50GB | $0.023 | $1.15 |
| AWS S3 | 100GB | $0.023 | $2.30 |
| AWS S3 | 200GB | $0.023 | $4.60 |
| AWS S3 | 500GB | $0.023 | $11.50 |
| AWS S3 | 1TB | $0.023 | $23.00 |
| AWS S3 | 10TB | $0.023 | $230.00 |
| Google Drive | 15GB | Free | $0 |
| Google Drive | 100GB | $0.0199 | $1.99 |
| Google Drive | 1TB | $0.0100 | $9.99 |
| Google Drive | 10TB | $0.0100 | $99.99 |
| DropBox | 1TB | $0.00825 | $8.25 |
| OneDrive | 50GB | $0.0398 | $1.99 |
| OneDrive | 1TB | $0.00699 | $6.99 |
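The Price/month column is simply storage multiplied by the per-gigabyte rate. A small awk-based helper makes it easy to redo that arithmetic for your own data size. The helper name monthly_cost is hypothetical, and it assumes 1TB = 1000GB, which matches how the S3 rows are computed.

```shell
#!/bin/sh
# Sketch: recompute "Price/month" as storage (GB) x price per GB per month.
# Assumes 1TB = 1000GB, matching how the S3 rows in the table are computed.

# monthly_cost GB RATE  (hypothetical helper name)
monthly_cost() {
    # awk does the floating-point math; printf rounds to whole cents.
    awk -v gb="$1" -v rate="$2" 'BEGIN { printf "%.2f\n", gb * rate }'
}

monthly_cost 200 0.023    # S3, 200GB: prints 4.60
monthly_cost 1000 0.023   # S3, 1TB: prints 23.00
```

Dividing instead of multiplying gives the per-gigabyte column for the flat-rate plans, e.g. $8.25 / 1000GB = $0.00825 for DropBox.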

My backup script template is designed to be relatively portable across different protocols and machines. You may need some adjustments (for example, for service authentication, or for some protocols' sensitivity to file paths or hosts). Notably, it won't work with the s3:// protocol because it doesn't have access to AWS credentials, and it won't work with the file:// protocol because it forces you to use a hostname and port. These are relatively easy adjustments, though.
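The following is a minimal sketch of what such a template could look like; it is not the author's script. Apart from backup_dest, the variable names are hypothetical, as are the sftp destination, the backup.sh file name, and the retention periods (a new full backup after one month, deletion after six months). --full-if-older-than and remove-older-than are standard duplicity options; the DRY_RUN switch belongs to this sketch only.

```shell
#!/bin/sh
# Sketch of a portable duplicity backup template (not the author's script).
# Defaults are hypothetical; override them via the environment.
set -e  # stop at the first failing command

backup_src="${backup_src:-/home/me}"
# Any URL duplicity supports works here; "//" means an absolute server path.
backup_dest="${backup_dest:-sftp://backup@nas.example.com//backups/myhost}"
full_every="${full_every:-1M}"  # start a new full chain after one month
keep_for="${keep_for:-6M}"      # remove backup sets older than six months

# Print commands instead of executing them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

do_backup() {
    # Incremental by default; full when the last full backup is too old.
    run duplicity --full-if-older-than "$full_every" "$backup_src" "$backup_dest"
    # remove-older-than only lists what it would delete unless --force is given.
    run duplicity remove-older-than "$keep_for" --force "$backup_dest"
}

# Run only when invoked as "backup.sh run", so the file can also be sourced.
if [ "${1:-}" = "run" ]; then
    do_backup
fi
```

With --full-if-older-than, a single cron entry covers both the periodic full backup and the incremental ones in between. duplicity's remove-older-than also keeps old sets that newer incrementals still depend on, so pruning won't break a chain. Running DRY_RUN=1 do_backup after sourcing the file prints both commands without executing them.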

Fetching Duplicity Info

Using the same variables as above:

duplicity collection-status ${backup_dest} lists the backups that are available.

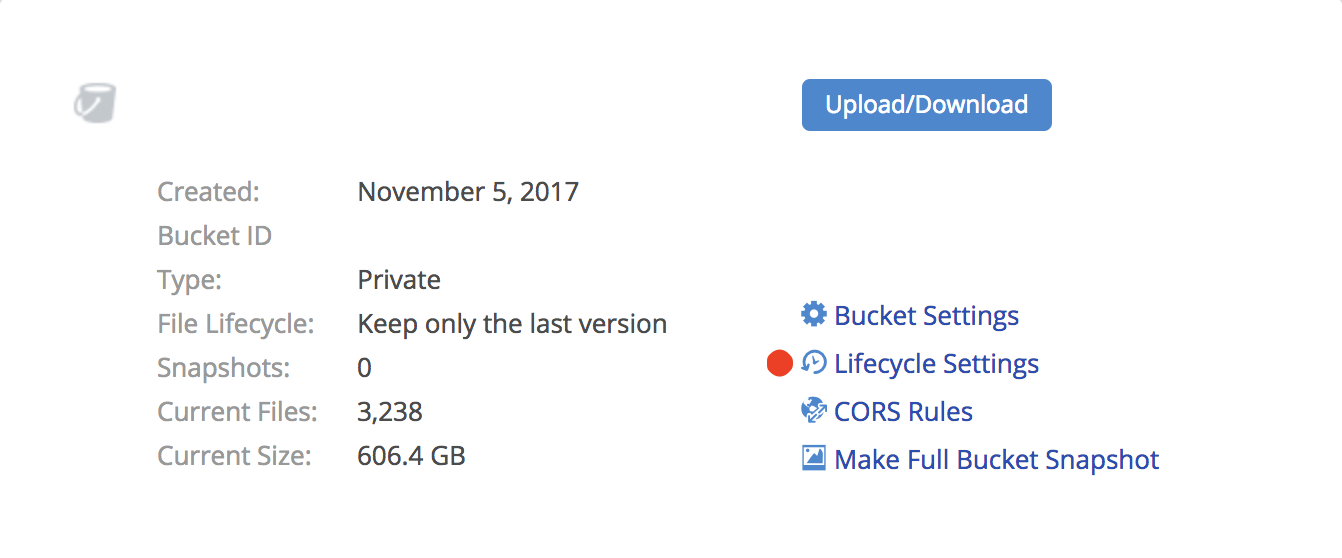

duplicity restore ${backup_dest} ./local-folder will restore the entire backup to the given folder. Be aware that if the local folder is not empty, duplicity will throw an error.

duplicity restore -t 1D --file-to-restore specific_file.txt ${backup_dest} restored-specific-file.txt will restore a single specific_file.txt to the state it was in one day ago and put the result in restored-specific-file.txt in the current directory.

duplicity list-current-files ${backup_dest} will list all of the files in the most recent backup.

Many of the options, like -t (--time), can be used with various commands; for example, list-current-files -t 3D shows the files as they were three days ago.
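The inspection and restore commands from this section can be collected into a few small shell functions. This is a sketch, not part of duplicity: the function names, the DRY_RUN switch, and the placeholder destination URL are hypothetical. It uses duplicity's subcommand for listing files, list-current-files, and passes -t time strings such as now, 3D, or 1D straight through.

```shell
#!/bin/sh
# Sketch: wrappers for inspecting and restoring duplicity backups.
# backup_dest should match the URL used for the backup; this default is a placeholder.
backup_dest="${backup_dest:-sftp://backup@nas.example.com//backups/myhost}"

# Print commands instead of executing them when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Show the backup chains and sets stored at the destination.
show_status() {
    run duplicity collection-status "$backup_dest"
}

# List backed-up files as of time $1 (e.g. 3D); defaults to "now".
show_files() {
    run duplicity list-current-files -t "${1:-now}" "$backup_dest"
}

# Restore path $1 (relative to the backup root) as it was at time $2
# (e.g. 1D for one day ago) into local path $3.
restore_file() {
    run duplicity restore -t "$2" --file-to-restore "$1" "$backup_dest" "$3"
}
```

For example, DRY_RUN=1 restore_file specific_file.txt 1D restored-specific-file.txt prints the single-file restore command without running it.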

In Closing

Duplicity is a very viable, effective, free, and portable backup solution. It does have its downsides (single-threaded file access, and it is currently somewhat inefficient), but for most people those issues won't matter. I use it personally because of its backup features and efficiency.